Why Should You Manage Your Log Ingestion?

Cost Implications

SIEM tools typically operate under a licensing model that charges based on the volume of logs ingested. Ingesting a high volume of irrelevant, duplicate or low-value logs can drive costs up rapidly, because every extra gigabyte is billed. These unnecessary logs also add time to every query you run, without contributing meaningful insight into the security landscape.
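To make the cost model concrete, here is a minimal Python sketch that assumes a simple flat per-GB price. The price and the daily volume are hypothetical placeholders, and real licences (commitment tiers, retention charges) are more complex. Under this assumption, every gigabyte dropped before ingestion comes straight off the bill.

```python
# Minimal sketch of a flat per-GB SIEM pricing model.
# PRICE_PER_GB is a hypothetical placeholder, not a real vendor price;
# substitute the figures from your own licence agreement.

PRICE_PER_GB = 4.00  # hypothetical cost per GB ingested
DAYS_PER_MONTH = 30


def monthly_cost(daily_gb: float, price_per_gb: float = PRICE_PER_GB) -> float:
    """Monthly cost under a flat per-GB model: proportional to the volume ingested."""
    return daily_gb * price_per_gb * DAYS_PER_MONTH


def monthly_savings(daily_gb: float, reduction: float,
                    price_per_gb: float = PRICE_PER_GB) -> float:
    """Money saved per month if `reduction` (a fraction from 0 to 1) of the volume is dropped before ingestion."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return monthly_cost(daily_gb, price_per_gb) * reduction


if __name__ == "__main__":
    # Hypothetical environment ingesting 100 GB per day.
    print(f"Baseline monthly cost: {monthly_cost(100):,.2f}")
    print(f"Saved by dropping 20% of volume: {monthly_savings(100, 0.20):,.2f}")
```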

Alert Fatigue

Ingesting logs indiscriminately can delay analysts running queries during an incident if the data is not parsed effectively. Duplicate records also create confusion as an analyst tries to work out exactly what the logs are showing. This can lead to duplicated effort between analysts, as well as time wasted parsing data on the fly.

System Overload

SIEM tools have limits to how much data they can process efficiently. Flooding the system with too many logs can lead to delays, performance degradation and even system failures, making it challenging to identify and respond to threats in real time. Also, while cloud SIEM solutions such as Microsoft Sentinel do not currently charge directly for poor query performance (although they do ‘throttle bad queries’), it is likely only a matter of time before vendors see this as a new revenue stream.

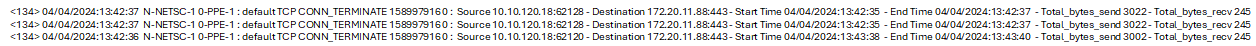

Initial Log Data

Let's take some basic sample log data, as shown below:

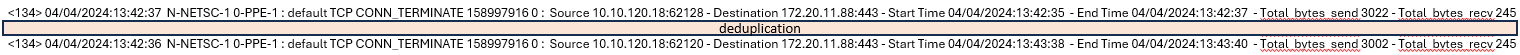

Deduplication

Deduplicating or aggregating data addresses cases where you may be ingesting the same data multiple times. This happens when a packet of traffic flows through multiple network devices that each submit that traffic to the SIEM, or when multiple security controls submit the same data; for example, both Defender for Endpoint and web filtering software may record a user's browsing activity.
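Conceptually, deduplication means choosing the fields that identify a single event and forwarding only the first record seen for each combination. The Python sketch below assumes records arrive as dictionaries with hypothetical field names (`timestamp`, `src_ip`, `dst_ip`, `dst_port`, `protocol`); it illustrates the technique rather than how any particular collector implements it.

```python
# Minimal deduplication sketch: the same connection reported by several devices
# (for example a firewall and a proxy) is forwarded only once.
# Field names and the dedup key are hypothetical; choose the fields that
# uniquely identify an event in your own data.

from typing import Iterable, Iterator, Sequence

DEDUP_KEY = ("timestamp", "src_ip", "dst_ip", "dst_port", "protocol")


def deduplicate(records: Iterable[dict],
                key_fields: Sequence[str] = DEDUP_KEY) -> Iterator[dict]:
    """Yield the first record for each key and silently drop later repeats."""
    seen = set()
    for record in records:
        key = tuple(record.get(field) for field in key_fields)
        if key in seen:
            continue  # already forwarded, e.g. by another device on the path
        seen.add(key)
        yield record


if __name__ == "__main__":
    base = {"timestamp": "2024-01-01T10:00:00Z", "src_ip": "10.0.0.5",
            "dst_ip": "203.0.113.10", "dst_port": 443, "protocol": "tcp"}
    events = [
        {**base, "device": "fw01"},
        {**base, "device": "proxy01"},  # same connection seen by a second device
        {**base, "timestamp": "2024-01-01T10:00:05Z", "device": "fw01"},
    ]
    for event in deduplicate(events):
        print(event["device"], event["timestamp"])
```

The `device` field is deliberately left out of the key, otherwise the two copies would look different. In practice devices rarely stamp identical timestamps, so you may need to round timestamps to a small window, and a long-running collector would need to expire old keys rather than keep the `seen` set forever.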

While the example below shows an unrealistic 33% of the data being unnecessary, standard network devices can duplicate between 5% and 20% of data in a large environment. The larger the environment, the more likely it is that duplicated data will exist. Check your storage use to see whether these potential savings are worthwhile.
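Before investing effort in deduplication, you can estimate the duplicate share from a sample export. This minimal sketch assumes the sample is a list of dictionaries and that the chosen key fields identify a unique event; the field names and sample values are hypothetical.

```python
# Estimate what fraction of a log sample is made up of duplicates.
# The key fields and sample data are hypothetical illustrations.

from collections import Counter
from typing import Sequence


def duplicate_share(records: Sequence[dict], key_fields: Sequence[str]) -> float:
    """Return the fraction (0.0 to 1.0) of records that repeat an earlier record's key."""
    if not records:
        return 0.0
    counts = Counter(tuple(r.get(f) for f in key_fields) for r in records)
    # Every occurrence of a key beyond the first is a duplicate.
    duplicates = sum(count - 1 for count in counts.values())
    return duplicates / len(records)


if __name__ == "__main__":
    sample = [
        {"timestamp": "10:00:00", "src_ip": "10.0.0.5", "dst_ip": "203.0.113.10"},
        {"timestamp": "10:00:00", "src_ip": "10.0.0.5", "dst_ip": "203.0.113.10"},
        {"timestamp": "10:00:02", "src_ip": "10.0.0.7", "dst_ip": "198.51.100.20"},
    ]
    share = duplicate_share(sample, ("timestamp", "src_ip", "dst_ip"))
    print(f"Duplicate share: {share:.0%}")  # 1 of 3 records -> 33%
```

Multiplying this share by your daily ingestion volume gives a rough estimate of what deduplication could save; record counts are only a proxy, since duplicate rows may differ in size from the rest.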

Some log collectors, such as Logstash, Cribl or NiFi, allow for pure deduplication or aggregation at collection time.
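Aggregation goes a step further than deduplication: instead of discarding repeats, it collapses them into one summary record with a count. The sketch below shows the general technique in Python; it is not the configuration syntax of Logstash, Cribl or NiFi, and the field names are hypothetical. It also assumes timestamps are ISO 8601 strings in a single consistent format, so that comparing them as strings orders them correctly.

```python
# Minimal aggregation sketch: collapse records that share the same key into a
# single summary carrying a count plus first/last-seen timestamps.
# Field names are hypothetical; timestamps are assumed to be ISO 8601 strings
# in one consistent format, so string comparison matches chronological order.

from typing import Iterable, List, Sequence


def aggregate(records: Iterable[dict], key_fields: Sequence[str],
              time_field: str = "timestamp") -> List[dict]:
    """Return one summary dict per distinct key, in order of first appearance."""
    summaries = {}
    for record in records:
        key = tuple(record.get(f) for f in key_fields)
        ts = record[time_field]
        summary = summaries.get(key)
        if summary is None:
            summaries[key] = {**dict(zip(key_fields, key)),
                              "count": 1, "first_seen": ts, "last_seen": ts}
        else:
            summary["count"] += 1
            summary["first_seen"] = min(summary["first_seen"], ts)
            summary["last_seen"] = max(summary["last_seen"], ts)
    return list(summaries.values())


if __name__ == "__main__":
    dns_queries = [
        {"timestamp": "2024-01-01T10:00:00Z", "host": "pc-042", "query": "example.com"},
        {"timestamp": "2024-01-01T10:00:30Z", "host": "pc-042", "query": "example.com"},
        {"timestamp": "2024-01-01T10:01:00Z", "host": "pc-042", "query": "example.com"},
        {"timestamp": "2024-01-01T10:00:10Z", "host": "pc-007", "query": "example.org"},
    ]
    for row in aggregate(dns_queries, ("host", "query")):
        print(row)
```

A real collector would typically flush these summaries at the end of a fixed time window, so an analyst can still see roughly when the activity happened.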

Normalisation or Parsing

Normalising or parsing means that, at collection, only the data that is required is stored, and it is stored in appropriate columns, i.e. source IP addresses are stored in the SourceIP field. Some SIEMs make it easy to ingest data into a single large field and parse it on the fly with built-in parsers every time a query is run. This not only has performance implications, but every part of the log is still held and costing you money. In the example below, 38% of the data can be dropped (not counting the duplicate row in the middle). Depending on the log format, you can reduce storage by more than 50% by parsing and storing only what you need.
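As a minimal illustration of parsing at collection, the Python sketch below splits a raw `key=value` log line into named columns and keeps only the fields an analyst needs. The log format, the raw key names and the `KEEP` mapping are hypothetical; real sources each need their own parser, and the byte count is only a rough proxy for what a SIEM would actually store.

```python
# Minimal parsing sketch: extract key=value pairs from a raw log line and keep
# only the mapped columns (e.g. srcip -> SourceIP), dropping everything else.
# The log format and the KEEP mapping are hypothetical examples.

import re
from typing import Dict

# Matches key=value where the value is either double-quoted or runs to the next space.
PAIR = re.compile(r'(\w+)=("[^"]*"|\S+)')

# Raw key -> column name; only these fields are stored.
KEEP = {
    "srcip": "SourceIP",
    "dstip": "DestinationIP",
    "dstport": "DestinationPort",
    "action": "Action",
}


def parse_line(line: str) -> Dict[str, str]:
    """Parse one raw line and return only the columns listed in KEEP."""
    raw = {key: value.strip('"') for key, value in PAIR.findall(line)}
    return {column: raw[key] for key, column in KEEP.items() if key in raw}


if __name__ == "__main__":
    line = ('date=2024-01-01 time=10:00:00 devname="fw01" srcip=10.0.0.5 '
            'dstip=203.0.113.10 dstport=443 action=accept policyid=12 '
            'msg="session allowed by policy"')
    parsed = parse_line(line)
    print(parsed)
    # Rough comparison: raw line bytes versus bytes of the values actually kept.
    kept_bytes = sum(len(v.encode()) for v in parsed.values())
    print(f"raw: {len(line.encode())} bytes, kept: {kept_bytes} bytes")
```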

Most log collectors will allow you to parse and filter data on ingestion before sending it to the SIEM, while other options, such as data collection rules (DCRs) in Sentinel, can parse data in Azure before it lands in the Sentinel tables (though DCRs can be user-unfriendly).
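Whether the work happens in a collector or in a DCR, an ingestion-time transformation usually boils down to two steps: drop rows you never need, then keep only the columns you do. In a DCR this logic is written in KQL (a `transformKql` statement over the incoming `source` data); the Python sketch below only mirrors that logic, and the record shape, column names and noise rule are hypothetical.

```python
# Minimal sketch of an ingestion-time transformation: filter out noisy rows,
# then project only the columns worth storing.
# The record shape, the column names and the noise rule are hypothetical.

from typing import Callable, Iterable, Iterator, Sequence


def transform(records: Iterable[dict],
              drop_if: Callable[[dict], bool],
              columns: Sequence[str]) -> Iterator[dict]:
    """Yield records that pass the filter, reduced to the requested columns."""
    for record in records:
        if drop_if(record):
            continue  # row never reaches the SIEM, so it is never billed
        yield {c: record[c] for c in columns if c in record}


def is_noise(record: dict) -> bool:
    """Hypothetical rule: treat debug-level events as noise."""
    return record.get("Severity") == "debug"


if __name__ == "__main__":
    incoming = [
        {"TimeGenerated": "2024-01-01T10:00:00Z", "Severity": "debug",
         "SourceIP": "10.0.0.5", "RawMessage": "heartbeat ok"},
        {"TimeGenerated": "2024-01-01T10:00:03Z", "Severity": "warning",
         "SourceIP": "10.0.0.9", "Action": "deny",
         "RawMessage": "blocked outbound connection"},
    ]
    for row in transform(incoming, is_noise, ["TimeGenerated", "SourceIP", "Action"]):
        print(row)
```

Filtering before projecting keeps the noise rule free to use columns (here `Severity`) that are not themselves stored.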